Publications

A list of my publications in reverse chronological order.

2026

-

Open-Source Machine Learning Computed Tomography Scan Segmentation for Spine Osteoporosis Diagnostics

Akshay Sankar, Michael R. Kann, Samuel Adida, and 13 more authors

Neurosurgery, 2026

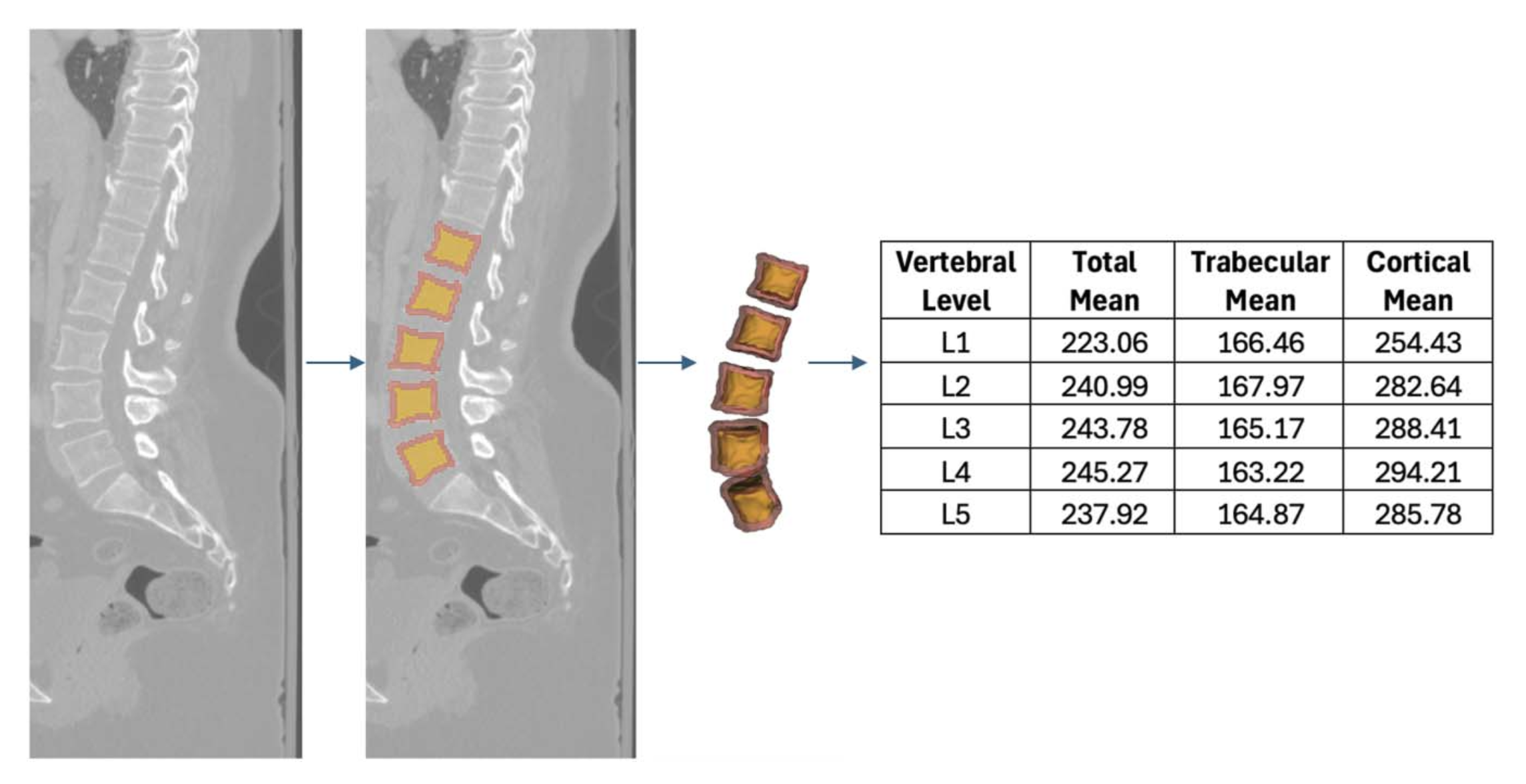

BACKGROUND AND OBJECTIVES: Dual-energy x-ray absorptiometry (DXA) is the standard for assessing bone mineral density (BMD); however, its accuracy is limited by bone architecture, acquisition quality, and clinical context. Hounsfield units (HUs) offer an alternative for osteoporosis risk stratification. Machine learning (ML) models can segment computed tomography (CT) anatomy and integrate HU data to generate BMD metrics previously unavailable. This study elucidates the capabilities of an automated CT segmentation platform and investigates the relationship between vertebral HUs and DXA stratifications of BMD.

METHODS: A retrospective analysis of 229 patients with lumbar CT and DXA scans within 1 year was performed. The TotalSegmentator ML model obtained segmentations of the lumbar spine, which were integrated with CT radiographic data to compute volume (cm³) and HU density of vertebral bodies, trabecular bone, and cortical bone. Vertebral body HU means were compared against lumbar, hip, and femoral neck DXA T-scores in healthy individuals (T-score > −1.0), patients with osteopenia (−1.0 ≥ T-score ≥ −2.5), and patients with osteoporosis (T-score < −2.5).

RESULTS: Patients (85.2% female) had a mean age of 71.02 ± 13.62 years and body mass index of 28.04 ± 7.51 kg/m². Mean HUs from L1-L5 correlated with femoral neck (r = 0.54, P < .001), lumbar (r = 0.54, P < .001), and hip (r = 0.46, P < .001) DXA T-scores. Compared with osteopenic individuals, healthy individuals had higher L1-L5 total HU (265.0 vs 226.4, P < .001), trabecular HU (179.3 vs 136.5, P < .001), and cortical HU (312.0 vs 274.8, P < .001). The L1-L5 total, trabecular, and cortical bone were predictive for low BMD (area under the curve [AUC] = 0.77, AUC = 0.80, and AUC = 0.75) and osteoporosis (AUC = 0.79, AUC = 0.75, and AUC = 0.80), respectively. Youden Index analysis identified optimal trabecular and cortical bone threshold values of 141.3 HU and 254.2 HU for low BMD as well as 132.3 HU and 249.0 HU for osteoporosis, respectively.

CONCLUSION: ML-driven CT segmentation correlates with DXA BMD stratifications and can provide a robust, consistent, and efficient assessment of HU density of critical vertebral structures.

@article{00006123-990000000-02028, author = {Sankar, Akshay and Kann, Michael R. and Adida, Samuel and Bhatia, Shovan and Shanahan, Regan M. and Colan, Jhair A. and Hurt, Griffin and Sharma, Nikhil and Kass, Nicolás M. and Hudson, Joseph S. and Agarwal, Nitin and Gerszten, Peter C. and Biehl, Jacob T. and Legarreta, Andrew and Andrews, Edward G. and McCarthy, David J.}, title = {Open-Source Machine Learning Computed Tomography Scan Segmentation for Spine Osteoporosis Diagnostics}, journal = {Neurosurgery}, year = {2026}, keywords = {Segmentation; Osteoporosis; Hounsfield units; DXA; Machine learning}, issn = {0148-396X}, url = {https://journals.lww.com/neurosurgery/fulltext/9900/open_source_machine_learning_computed_tomography.2028.aspx}, doi = {10.1227/neu.0000000000003928}, }

2025

-

Optimizing the Workflow of Superficial Temporal Artery Mapping in Extracranial-Intracranial Bypass Surgery Using Mixed Reality: A Proof-of-Concept Study

Shovan Bhatia, Aaron Huynh, Regan M. Shanahan, and 10 more authors

World Neurosurgery, Dec 2025

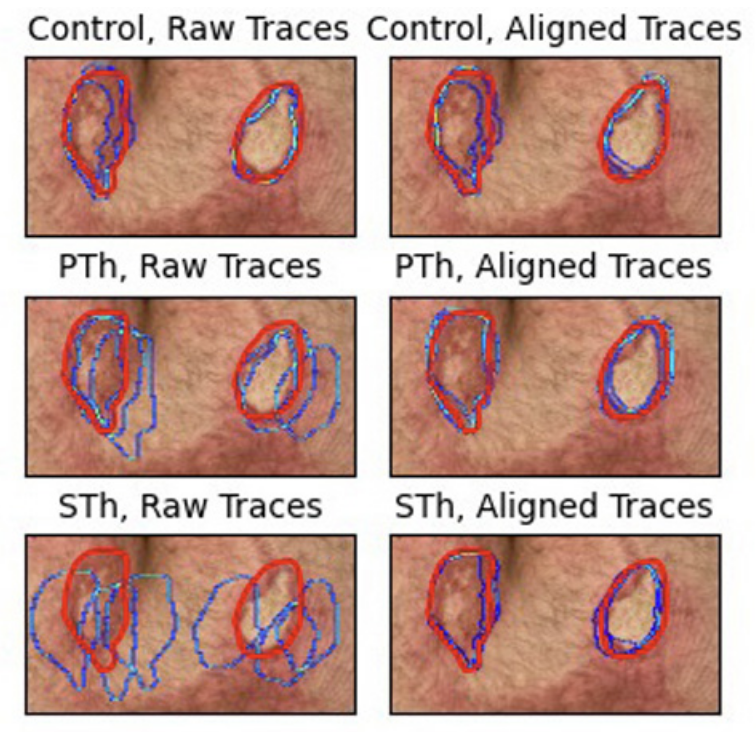

Background: Doppler ultrasound is the standard for mapping the superficial temporal artery (STA) during extracranial-intracranial (EC-IC) bypass surgery. Mixed reality (MR) offers a novel alternative by providing patient-specific anatomic overlays to better visualize the STA. This study aims to validate MR-guided STA mapping as a reliable preoperative planning tool in EC-IC bypass to the middle cerebral artery.

Methods: In this proof-of-concept study, 7 patients undergoing STA–middle cerebral artery bypass surgery were enrolled. Preoperative computed tomography angiograms were superimposed onto the patient using MEDIVIS SurgicalAR. Six standardized anatomic fiducial points were chosen for intraoperative registration. Five points along the parietal branch of the STA were annotated using MR and Doppler techniques. Point-to-point discrepancies between the MR and Doppler maps were analyzed, and map overlap was evaluated with computer vision techniques (OpenCV, Python). Significance was set at P < 0.05.

Results: MR mapping of the STA was faster than Doppler mapping (11.3 ± 1.47 vs. 67.2 ± 17.6 seconds; P = 0.001). When considering the time to register, the overall time for MR mapping was similar to Doppler (79.2 ± 39.8 vs. 67.2 ± 17.6 seconds; P = 0.78). Notably, MR outperformed Doppler at the distal segments of the STA (2.71 ± 0.91 vs. 20.90 ± 8.46 seconds; P < 0.001). Overall, the MR and Doppler maps demonstrated comparable alignment, with an average deviation of 4.46 ± 2.64 mm along the entire course of the mapped vessel.

Conclusions: MR provides comparable STA mapping accuracy to Doppler while reducing the mapping time. These findings suggest that the planned incision is unlikely to differ, providing early evidence for the feasibility of MR-guided mapping for EC-IC bypass procedures.

@article{BHATIA2025124562, title = {Optimizing the Workflow of Superficial Temporal Artery Mapping in Extracranial-Intracranial Bypass Surgery Using Mixed Reality: A Proof-of-Concept Study}, journal = {World Neurosurgery}, volume = {204}, pages = {124562}, year = {2025}, issn = {1878-8750}, doi = {https://doi.org/10.1016/j.wneu.2025.124562}, url = {https://www.sciencedirect.com/science/article/pii/S1878875025009209}, month = dec, author = {Bhatia, Shovan and Huynh, Aaron and Shanahan, Regan M. and Kann, Michael R. and Gopakumar, Adway and Sharma, Nikhil and Kass, Nicolás M. and Hurt, Griffin and Basdeo, Rishi and Don, Nicole and Lang, Michael J. and Biehl, Jacob T. and Andrews, Edward G.}, keywords = {Bypass surgery, Cerebrovascular neurosurgery, Mixed reality, Operative workflow}, }

-

MR-MDEs: Exploring the Integration of Mixed Reality into Multi-display Environments

Griffin J. Hurt, Talha Khan, Nicolás Matheo Kass, and 3 more authors

Proc. ACM Hum.-Comput. Interact., Dec 2025

Multi-display environments (MDEs) are applicable to both everyday and specialized tasks like cooking, appliance repair, surgery, and more. In these settings, displays are often affixed in a manner that prevents reorientation, forcing users to split their attention between multiple visual information sources. Mixed reality (MR) has the potential to transform these spaces by presenting information through virtual interfaces that are not limited by physical constraints. While MR has been explored for single-task work, its role in multi-task, information-dense environments remains relatively unexplored. Our work bridges this gap by investigating the impact of different display modalities (large screens, tablets, and MR) on performance and perception in these environments. Our study’s findings demonstrate the capability for MR to integrate into these spaces, extending traditional display technology with no impact to performance, cognitive load, or situational awareness. The study also further illustrates the nuanced relationship between performance and preference in tools used to guide task work. We provide insights toward the eventual authentic integration of MR in MDEs.

@article{10.1145/3773074, author = {Hurt, Griffin J. and Khan, Talha and Kass, Nicol\'{a}s Matheo and Tang, Anthony and Andrews, Edward and Biehl, Jacob}, title = {MR-MDEs: Exploring the Integration of Mixed Reality into Multi-display Environments}, year = {2025}, issue_date = {December 2025}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, volume = {9}, number = {8}, url = {https://doi.org/10.1145/3773074}, doi = {10.1145/3773074}, journal = {Proc. ACM Hum.-Comput. Interact.}, month = dec, articleno = {ISS017}, numpages = {22}, keywords = {Segmented displays, display replacement, multi-display environments, virtual displays}, }

-

SurrealityCV: An Open-Source, Cross-Platform Unity Library for Mixed Reality Computer Vision

Griffin J Hurt, Calvin Brinkman, Ethan Crosby, and 3 more authors

In Proceedings of the 2025 ACM Symposium on Spatial User Interaction, Nov 2025

Many mixed reality applications rely on computer vision to perform operations like object tracking, identification, and registration. While platform-specific solutions like OpenCV for Unity and ARKit exist, there is currently no cross-platform software library for performing these tasks across most consumer mixed reality hardware. Here, we present an open-source, cross-platform Unity library that facilitates common computer vision workflows within mixed reality applications. The package is written primarily in C++ using OpenCV as a backend, with APIs in C and C# to maximize compatibility. The library is modular, and new modules can be easily created to extend its functionality. The source code is available on GitHub at https://github.com/surreality-lab/SurrealityCV under the MIT license.

@inproceedings{10.1145/3694907.3765953, author = {Hurt, Griffin J and Brinkman, Calvin and Crosby, Ethan and Dhamale, Akshat and Andrews, Edward and Biehl, Jacob}, title = {SurrealityCV: An Open-Source, Cross-Platform Unity Library for Mixed Reality Computer Vision}, year = {2025}, month = nov, isbn = {9798400712593}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3694907.3765953}, doi = {10.1145/3694907.3765953}, booktitle = {Proceedings of the 2025 ACM Symposium on Spatial User Interaction}, articleno = {43}, numpages = {3}, keywords = {Mixed Reality, Augmented Reality, Computer Vision, Unity, Rapid Prototyping}, location = { }, series = {SUI '25}, }

-

814 Evaluation of Mixed Reality for Burn Margin Visualization and Surgical Planning

Christopher Fedor, Griffin Hurt, Edward Andrews, and 2 more authors

Journal of Burn Care & Research, Apr 2025

Mixed reality (MR) allows virtual content to be merged with the physical world, enabling novel visualization affordances not available with traditional displays. These affordances are particularly beneficial in the medical domain, as surgeons can view imaging and other relevant patient data in a 3D spatial context. In burn surgery, excision and grafting are mainstay treatments for deep partial and full-thickness burns. However, identifying regions to be excised is nontrivial. Recent work has developed imaging techniques that assist surgeons in determining burn margins. In this study, we demonstrate a new application of MR for burn surgery by building and evaluating a system that overlays deep burn margins onto a simulated anatomical surface for surgeons to trace. This represents a first step towards creating an MR system that can help physicians interpret burn surface area and depth for surgical planning.

@article{fedor2025814, title = {814 Evaluation of Mixed Reality for Burn Margin Visualization and Surgical Planning}, author = {Fedor, Christopher and Hurt, Griffin and Andrews, Edward and Biehl, Jacob and Egro, Francesco}, journal = {Journal of Burn Care \& Research}, volume = {46}, number = {Supplement 1}, pages = {S266-S266}, year = {2025}, month = apr, publisher = {Oxford University Press US}, doi = {10.1093/jbcr/iraf019.345}, }

2024

-

EndovasculAR: Utility of Mixed Reality to Segment Large Displays in Surgical Settings

In 2024 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Apr 2024

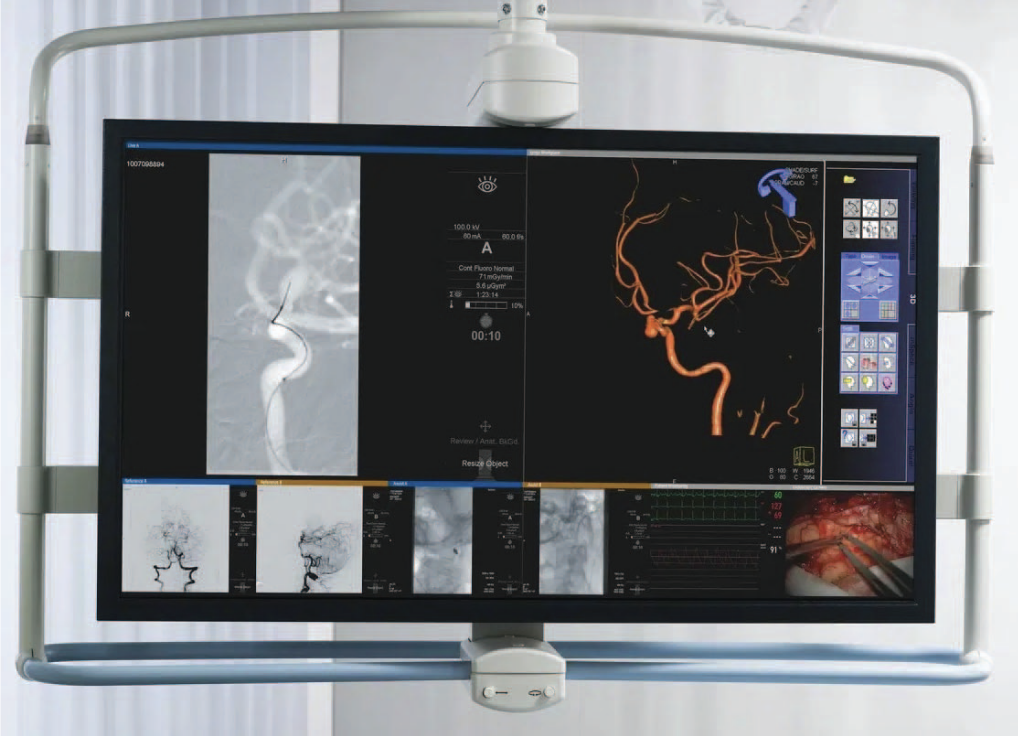

Mixed reality (MR) holds potential for transforming endovascular surgery by enhancing information delivery. This advancement could significantly alter surgical interfaces, leading to improved patient outcomes. Our research utilizes MR technology to transform physical monitor displays inside the operating room (OR) into holographic windows. We aim to reduce cognitive load on surgeons by counteracting the split attention effect and enabling ergonomic display layouts. Our research is tackling key design challenges, including hands-free interaction and occlusion management in densely crowded ORs. We are conducting studies to understand user behavior changes when people consult information on holographic windows compared to conventional displays.

@inproceedings{hurt2024endovascular, title = {EndovasculAR: Utility of Mixed Reality to Segment Large Displays in Surgical Settings}, author = {Hurt, Griffin J and Khan, Talha and Kann, Michael and Andrews, Edward and Biehl, Jacob}, booktitle = {2024 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)}, pages = {1059--1060}, year = {2024}, organization = {IEEE}, doi = {10.1109/VRW62533.2024.00326}, }